© 2025 The authors. This article is published by IIETA and is licensed under the CC BY 4.0 license (http://creativecommons.org/licenses/by/4.0/).

OPEN ACCESS

This research advances mobile device communication in Mobile Ad-Hoc Networks (MANETs), where devices frequently move and alter their connections. By utilizing neural networks, a form of artificial intelligence, the study captures past movement patterns to predict optimal data transmission routes, enhancing reliability and efficiency. Deep learning strategies, such as Q-learning, were employed to enable real-time adaptation of the network based on node mobility, signal strength, and environmental factors. This dynamic approach ensures the network can seamlessly handle changes and consistently route messages through the best possible paths. The integration of deep learning techniques with Location-Aided Routing (LAR) significantly improved MANET performance. Combining these advanced algorithms with LAR's geographic data framework optimized routing efficiency and adaptability in dynamic network conditions. Key Performance Indicators (KPIs), including throughput, delay, and energy efficiency, demonstrated the effectiveness of this approach under diverse conditions. The results showcase how this intelligent framework not only makes MANETs more adaptive to unpredictable changes but also fosters robust and efficient communication.

user equipment, mobile edge nodes, reinforcement learning, Mobile Ad-Hoc Networks, Q-learning, Location-Aided Routing, deep learning, GPS

Mobile Ad-Hoc Networks (MANETs) can be improved, in part, by optimizing and combining widely used protocols. MANET protocols support effective routing, node mobility, and dynamic topology changes, as mentioned in the study [1]. One of the most important MANET routing approaches is Location-Aided Routing (LAR), which allows nodes to dynamically self-organize multi-hop routes. Because MANET topologies are inherently dynamic, routing systems must adjust quickly to changing network conditions. The essential principle of LAR is to establish routes to new destinations quickly without maintaining inactive routes, according to the study [2]. A MANET is a decentralized, Ad-Hoc wireless network with a dynamic topology that does not depend on pre-existing infrastructure such as the routers and access points of wired and managed wireless networks. MANETs are used in various commercial and industrial applications such as telemedicine, disaster management, virtual navigation, defense, tele-geo-processing, agriculture, and vehicular area networks, as mentioned in the study [3]. Achieving good MANET performance is challenging because of high energy consumption, packet loss, network instability, scalability and security issues, and limited adaptability caused by node mobility.

The advent of MANETs marked a significant advance in wireless communication, offering adaptability, scalability, and decentralization for dynamic systems without fixed infrastructure, as stated in the study [4]. However, ensuring effective routing in MANETs remains a complex challenge because of network instability, node mobility, and constantly changing topology. LAR has emerged as a promising approach to mitigate these challenges by leveraging geographical data to improve routing efficiency. Recently, the integration of deep learning methods into LAR has opened new avenues for further improving routing performance. By using advanced algorithms capable of learning from large amounts of data, deep learning can potentially predict link stability, optimize routing paths, and adapt to network dynamics in real time, according to the study [5]. This paper investigates the integration of deep learning with LAR, aiming to substantially improve the robustness, efficiency, and intelligence of routing mechanisms in MANETs, thereby enabling more reliable communication in highly dynamic and mobile environments.

The dynamic and decentralized nature of MANETs presents unique challenges and opportunities across various application domains. For example, in disaster recovery operations, where traditional communication infrastructure is often unavailable, MANETs enable real-time coordination among rescue teams. However, ensuring reliable communication in such scenarios is hindered by node mobility, limited battery life, and rapidly changing network topologies. Similarly, in vehicular Ad-Hoc networks (VANETs) for intelligent transportation systems, the high speed of vehicles and frequent disconnections pose significant challenges for maintaining stable routing paths. Opportunities in this field include leveraging advanced technologies like deep learning to enhance predictive modeling and adaptive routing. Case studies, such as the use of LAR integrated with Q-learning in smart agriculture networks, demonstrate how these techniques optimize data transmission by predicting node mobility and reducing energy consumption. These examples underscore the potential of combining deep learning with MANET protocols to address real-world challenges, ensuring efficient and reliable communication across diverse environments.

Contributions of study

This study makes several contributions to the field of MANETs by using deep learning to improve routing efficiency and stability under dynamically changing conditions.

·Deep Learning for Enhanced Routing: Introduced a novel approach utilizing DL for improving MANET routing and node placement based on past mobility patterns.

·Adaptive Route Design: Developed DL models for real-time adaptive route design, leveraging environmental data, node movement, and signal strength with LAR schemes.

·Evaluation of Intelligent Routing Algorithms: Explored the concepts and principles of the LAR protocol with GPS information.

·Integration of DL and LAR: Combined deep learning based Q-learning with LAR to significantly enhance routing efficiency in dynamic MANET environments.

The rest of this paper is organized as follows: Section 2 presents the taxonomy of MANET routing protocols. Section 3 reviews related work. Section 4 describes the method. Section 5 presents the discussion and recommendations. Section 6 discusses the limitations, and Section 7 concludes the paper.

The primary objective of a MANET is to guarantee that every one of its mobile nodes (MNs) can establish a connection and remain reachable at all times, as stated in the study [6]. Figure 1 shows an overview of the architectural design. The main features and components of the MANET design are:

·Protocols for Ad-Hoc routing allow mobile nodes to communicate with one another. These protocols allow mobile nodes to dynamically create communication links without relying on a permanent infrastructure [7].

·Access points with direct internet connections serve as internet gateways. By transmitting information between the MNs and the internet, they make it possible for the MNs to access the internet [8].

·Router Connection: In order to link the mobile network to the wider internet, access points are linked to routers. A few access points might be linked to permanent routers, while others could be linked to the internet using satellite [9].

·Routing protocols that work well keep route information current while minimizing unnecessary overhead.

Figure 1. Overview of the MANET architectural design

The common and widely used MANET routing protocols, according to source [10], are shown in Figure 2. Ad-Hoc On-Demand Distance Vector (AODV), Dynamic Source Routing (DSR), and Optimized Link State Routing (OLSR) are examples of MANET routing protocols. Because MANETs are dynamic and decentralized, each of these protocols has to deal with its own set of problems. Routing and forwarding traffic in a MANET is difficult because the network topology changes over time: MANETs are built from mobile devices whose locations keep changing, as mentioned in the studies [11, 12]. Because of device mobility, it is hard to send a packet from one device to another through the network, since a stable or predictable path is difficult to find in such a dynamic topology. For example, consider a car trying to send a packet through a neighboring device such as a mobile phone or a laptop, according to the studies [13, 14]. The packet may not reach its destination because the car or the mobile phone may move to a different location and lose the connection needed to transfer the packet from one device to the other (see Figure 3).

Figure 2. Well known protocols that deal with MANETs

Figure 3. Conventional MANET with link states and directions

The nodes in the graph move according to some mobility pattern, causing temporal changes in the graph topology. Such mobility can be represented abstractly using what is called a mobility model, as shown in Figure 3.

Routing protocols in MANETs play a significant part in defining the rules and strategies for forwarding data between mobile nodes in dynamic network conditions, as per source [15]. Given the diversity of application requirements and network configurations, these protocols are broadly classified into several categories, each designed to address particular challenges associated with the highly mobile and decentralized nature of MANETs, as stated in the studies [16, 17]. A MANET is a group of independent mobile nodes that communicate with each other wirelessly instead of connecting to established networks, as stated in the study [18]. According to the study [19], routing protocols adapt the path that data packets take from their origin to their destination. To improve and optimize communication strategies, this research aims to gain a better understanding of how wireless networks work.

·Proactive (table driven) Routing Protocol

In table-driven routing protocols, each node maintains one or more tables holding the latest routing information, as described in the study [20]. The study [21] proposed DSDV, a table-driven routing technique based on the Bellman-Ford routing approach. It also presented OLSR, which uses link states for proactive routing, together with a hierarchical proactive routing mechanism for MANETs that is based on OLSR and addresses the inefficiency of flat routing caused by limited transmission range and bandwidth. The review [22] developed the source tree adaptive routing (STAR) protocol for finding the optimal routing path in a MANET. It uses a source tree structure in which every node builds and stores its preferred route to every possible destination. STAR encompasses the least overhead routing approach (LORA) and the optimum routing approach (ORA). As its name indicates, LORA incurs lower packet overhead than ORA, which instead aims for optimal routes.

·Reactive (on-demand) Routing Protocol

In reactive routing protocols, the routing path is discovered only when a node wants to send data to a destination node. These protocols do not maintain routing tables, as mentioned in the study [23]. They have lower control overhead and lower battery consumption because nodes send control packets only on demand. Some of these protocols use associativity and stability for route discovery and maintenance. This technique is simple, loop-free, deadlock-free, and produces no duplicate packets. It favors strong links on the source-to-destination route and can select the optimal path when multiple paths are available, according to the study [24]. Examples of reactive protocols include Ad-Hoc On-Demand Distance Vector (AODV) and Dynamic Source Routing (DSR).

·Hybrid Routing Protocol

A hybrid routing protocol combines proactive and reactive routing mechanisms to improve routing performance. The study [25] investigated the zone routing protocol (ZRP), which is based on intra-zone and inter-zone routing. It also investigated a landmark-based scheme that treats the movement of a single unit as group movement. The study further explored a loop-free, scalable, adaptive, and efficient hybrid technique, namely relative distance micro-discovery Ad-Hoc routing.

·Location Aware Routing Protocol

In location-aware routing, the position of other nodes can be acquired using GPS, which helps to improve the scalability of the network, as stated in the study [26]. The protocol consists of a route establishment phase and a route maintenance phase. The study also explored the maximum expectation within transmission range, which uses GPS positions for the LAR method. Geographical landmark routing (GLR) was also proposed as a solution to the triangular routing problem and the blind detouring problem, according to the study [27].

Properties of MANETs: Nodes in a MANET communicate with one another and with the network's infrastructure in a decentralized, self-organizing fashion as per source [28]. Several routing protocols have been established to provide effective communication, since routing is an essential component of these networks. Characteristics of routing protocols include the following:

·The mobility of nodes causes MANETs to have a topology that is constantly changing. Because nodes are free to join or exit the network at will, the topology of the network is constantly evolving [29].

·MANET node computational power, bandwidth, and battery life are frequently constrained. While creating routing algorithms, it is important to preserve these resources for effective communication [30].

·Quality of Service (QoS) support is an important feature of MANETs, although its importance depends on the specific application. Routing protocols should account for QoS concerns such as latency, jitter, and bandwidth [31].

Making the most of MANETs requires minimizing routing overhead, which in turn reduces the amount of control packets and signaling required for routing according to the study [32].

Key Performance Metrics: Many parameters are available for evaluating the performance of a MANET. The key ones are throughput, end-to-end delay, packet delivery ratio, and energy consumption.

·Throughput: It is an important parameter for evaluating the performance of a routing protocol in a MANET. It is defined as the number of packets successfully delivered to the destination per unit time, as given in the equation below. It is measured in bits per second (bps), bytes per second (Bps), or packets per second (P/Sec).

$\text{Throughput}=\frac{\text{Packet}_D}{T}$

where $\text{Packet}_D$ represents the number of packets successfully delivered to the destination node and $T$ represents the simulation duration. Variations in node mobility and network traffic affect the throughput.

·End-to-End delay: It is the time a packet takes to travel from its source to its destination. It is measured in milliseconds. The contributors to this delay include transmission delay at the source, queuing delay, and receiving delay.

·Packet Delivery ratio: It is the ratio of the number of packets delivered to all destination nodes to the number of packets introduced by all source nodes, as given in the equation below:

$\text{PDR}=\frac{\text{Packet}_D}{\text{Packet}_S}$

where $\text{Packet}_S$ stands for the total number of packets sent by the source nodes.

·Energy Consumption: In a MANET, every node is a battery-operated device, so for successful long-term communication the energy a node consumes while transmitting or receiving packets should be minimal. Energy consumption is measured in joules. A node consumes energy in transmitting, receiving, sleep, or idle mode.
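The throughput and packet delivery ratio definitions above can be computed directly from packet counters. The following is a minimal sketch, assuming the counters are collected from a simulation run; the `RunCounters` container and its field names are hypothetical and not part of the original study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunCounters:
    """Packet counters from one simulation run (hypothetical container)."""
    packets_sent: int        # Packet_S: packets introduced by all source nodes
    packets_delivered: int   # Packet_D: packets that reached their destinations
    duration_s: float        # T: simulation duration in seconds


def throughput(run: RunCounters) -> float:
    """Throughput = Packet_D / T, in packets per second."""
    if run.duration_s <= 0:
        raise ValueError("duration must be positive")
    return run.packets_delivered / run.duration_s


def packet_delivery_ratio(run: RunCounters) -> float:
    """PDR = Packet_D / Packet_S, a value between 0 and 1."""
    if run.packets_sent <= 0:
        raise ValueError("at least one packet must be sent")
    return run.packets_delivered / run.packets_sent


# Illustrative numbers only, not results from the study.
run = RunCounters(packets_sent=1000, packets_delivered=850, duration_s=100.0)
print(throughput(run))             # 8.5 packets per second
print(packet_delivery_ratio(run))  # 0.85
```

Multiplying the packet throughput by the packet size in bits would give the value in bits per second.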

The main trade-offs in LAR are as follows [33].

·Accuracy vs. Overhead: More frequent updates of location information increase the accuracy of routing decisions, but they also increase the routing overhead. There is a trade-off between achieving high routing accuracy and minimizing control traffic.

·Scalability vs. Performance: As the network size increases, maintaining the performance of location-aided routing protocols becomes challenging because managing and updating location data grows more complex [33].

·Latency vs. Efficiency: Techniques that reduce end-to-end delay may increase overhead or decrease energy efficiency. For instance, pre-fetching routes or maintaining backup routes can reduce latency, but at the cost of extra overhead and energy consumption [34, 35].

The key metrics affected by LAR are listed below.

·Route Discovery Time: The time taken to discover a route. LAR can reduce route discovery time by restricting the search zone for a new route to the expected region around the destination's last known location [36].

·Route Maintenance: The effectiveness of route maintenance improves with location data because it enables prediction of link breakages and proactive route repairs [37].

·Link Stability: Routes chosen based on up-to-date location data tend to be more stable, as they can adjust to the mobility of the nodes [38].

·Geocasting Efficiency: LAR substantially improves the efficiency of geocasting (sending messages to a geographical region) by directing messages only towards the area of interest rather than the entire network [39].

Current trends in the literature highlight a focus on heuristic-based optimization techniques, hierarchical routing structures, and energy-efficient protocols to address the challenges of MANETs, such as dynamic topologies and resource constraints. However, most studies are limited by their reliance on static or reactive mechanisms, which struggle to adapt to the rapidly changing conditions in MANET environments. Additionally, while machine learning has gained traction in network optimization, its application to LAR specifically remains underexplored, with few studies leveraging its predictive capabilities to improve routing efficiency and stability. This study identifies a critical gap in the literature: the lack of integration of deep learning techniques to enhance LAR protocols by utilizing spatial-temporal data for dynamic routing decisions.

Research on MANETs over the past two decades has broadly investigated different routing protocols and security improvements, with the LAR protocol being a key focus area. The evaluation, analysis, and limitations of each routing protocol relative to the others are shown in Table 1. LAR was first proposed in 1998, aiming to use geographic location data to streamline route discovery [39]. This protocol introduced the concept of a "request zone," which substantially reduces the number of routing messages by restricting route searches to a particular geographic area [40]. This advance marks a significant step forward in the efficiency of routing protocols for MANETs, which are characterized by the frequent mobility of their wireless hosts and the resulting dynamic changes in network topology.
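The request zone idea can be sketched in a few lines. The example below assumes the rectangular request zone of the original LAR proposal: the destination is expected to lie within a circle of radius speed × elapsed time around its last known position, and the request zone is the smallest axis-aligned rectangle containing the source and that circle. All names (`Rect`, `request_zone`, `should_forward`) are hypothetical, and the sketch is illustrative rather than the implementation used in this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle used as the request zone."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def request_zone(src, dst_last_known, dst_speed, elapsed) -> Rect:
    """Smallest rectangle containing the source and the expected zone.

    The expected zone is a circle of radius dst_speed * elapsed centered on
    the destination's last known position (assumed rectangular LAR scheme).
    """
    radius = dst_speed * elapsed
    xs, ys = src
    xd, yd = dst_last_known
    return Rect(
        x_min=min(xs, xd - radius),
        y_min=min(ys, yd - radius),
        x_max=max(xs, xd + radius),
        y_max=max(ys, yd + radius),
    )


def should_forward(zone: Rect, node_position) -> bool:
    """A node rebroadcasts a route request only if it lies inside the zone."""
    return zone.contains(*node_position)


# Source at (0, 0); destination last seen at (100, 100), moving at up to
# 5 m/s, position information 4 s old, so the expected-zone radius is 20 m.
zone = request_zone((0.0, 0.0), (100.0, 100.0), dst_speed=5.0, elapsed=4.0)
print(zone)                                # Rect(x_min=0.0, y_min=0.0, x_max=120.0, y_max=120.0)
print(should_forward(zone, (50.0, 60.0)))  # True: inside the request zone
print(should_forward(zone, (-10.0, 50.0))) # False: outside, request is dropped
```

Nodes outside the rectangle discard the route request, which is how the request zone limits the number of routing messages.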

We take LAR as the starting point. Table 1 summarizes and compares the evaluation, analysis, and limitations of the various routing protocols.

Table 1. Evaluation, analysis and limitations of various routing protocols in MANETs

| Protocol | Working | Evaluation | Analysis | Limitations |
|---|---|---|---|---|
| DSR [20-22] | Reactive routing. Source routing with a complete route included in the packet header. Adapts to dynamic topology changes. | Evaluation based on packet overhead, route discovery efficiency, and adaptability to topology changes. | DSR may face scalability issues due to large header sizes. Route maintenance can become resource-intensive. Susceptible to network partitioning issues. | DSR encounters scalability challenges due to large packet headers, resource-intensive route maintenance in dynamic networks, and susceptibility to network partitioning issues, affecting overall reliability. |
| OLSR [20, 21] | Proactive routing. Maintains a complete and up-to-date topology using periodic link-state updates. | Assessment of efficiency in maintaining a proactive topology. Metrics include routing table stability and control message overhead. | OLSR may incur higher overhead in terms of control message transmission. Not as adaptive to sudden topology changes. Prone to increased signaling overhead as the network scales. | OLSR incurs higher control message overhead, lacks adaptability to sudden topology changes, and experiences increased signaling overhead as the network scales, impacting real-time responsiveness. |
| AODV [23, 24] | Reactive routing. Establishes routes on demand. Minimizes routing table overhead. | Performance metrics: packet delivery ratio, end-to-end delay, routing overhead. Adaptability to dynamic changes. | AODV may suffer from route discovery latency in large networks with frequent topology changes. Vulnerable to certain security threats such as black hole attacks. Limited scalability in terms of network size. | AODV faces latency issues in large networks with frequent topology changes, is vulnerable to security threats like black hole attacks, and has limited scalability due to increased overhead in route maintenance and control messages. |
| ZRP [25] | Hybrid approach. Combines proactive intra-zone routing and reactive inter-zone routing. | Evaluation based on adaptability, overhead reduction, and efficiency in zone formation. | ZRP may face challenges in defining optimal zone sizes. Complexity increases with the number of zones. Sensitive to parameter settings, impacting performance. | ZRP struggles with defining optimal zone sizes, complexity increases with the number of zones, and the protocol is sensitive to parameter settings, making it less straightforward to configure and optimize. |
| LAR [26, 27] | LAR works by using GPS or comparable technologies to obtain the current location of nodes. It uses this geographic data to define a "request zone" for route discovery. | Evaluation based on PDR, end-to-end delay, energy consumption, and throughput of the network. | LAR analysis is conducted through adaptability, stability, scalability, and efficiency. | LAR's effectiveness is bounded by a few limitations. Fundamentally, its reliance on accurate and current location information means that errors or delays in updating this data can lead to inefficient routing decisions, increased route discovery times, and higher energy consumption. |

In previous studies, the integration of deep learning with LAR for MANETs has centered on overcoming the inherent challenges posed by the network's dynamic topology and the instability of conventional routing protocols in such volatile conditions. Initial research in this area introduced the concept of using machine learning models to predict node mobility and thereby improve the predictive capabilities of LAR protocols, according to the study [41]. Subsequent studies built upon this foundation by specifically incorporating deep learning techniques, which offer advanced pattern recognition capabilities and the capacity to learn complex network dynamics from data collected over time. As a result, more robust, efficient, and capable routing methods that incorporate location data are available for handling the dynamic and decentralized character of MANETs, as mentioned in the study [41].

Mobility models are simulated in one of two ways: by using traces obtained from real trials or by generating synthetic data from statistical features. Synthetic mobility models have two subtypes: individual and group. Individual mobility models describe the paths and actions of specific entities, such as individuals or mobile devices, as stated in the study [42]. Group mobility models, by contrast, mimic the coordinated actions of a network of mobile nodes by accounting for the collective motion of numerous units, according to the study [43]. Three on-demand protocols with distinct uses in MANETs are AODV, LAR, and DSR. This study uses a deep learning approach to demonstrate results for reactive routing protocols, particularly the LAR protocol, whose output it aims to improve. An on-demand routing protocol such as LAR simplifies routing infrastructure maintenance by generating routes only when they are needed. LAR establishes data routes by taking node positions into account, so gathering location data via GPS or other localization methods is essential. Whenever a LAR node has to establish a connection with another node, the route discovery procedure begins, and location data is used during this procedure to support better routing decisions, according to the study [44]. Nodes rely heavily on routing protocols to create and sustain connections with other nodes. Because they adjust to different network topologies and reduce control overhead, on-demand routing algorithms are well suited to MANETs. Additionally, routing theory classifies protocols as source-initiated, table-driven, or geographic. When communication with a destination is needed, source-initiated protocols, such as AODV and DSR, enable the source node to start the route discovery process [45]. Table-driven protocols, such as DSDV and OLSR, keep detailed routing tables that include information about the whole network. Geographic routing protocols, such as GRP and GPSR, provide distinct benefits in specific contexts by basing routing decisions on the geographical positions of nodes [46].

The literature review provides a comprehensive overview of existing studies on MANETs and LAR protocols, detailing various optimization techniques and their contributions. However, it could benefit from a more critical analysis to emphasize the limitations of these studies and position this research within the existing body of knowledge. For instance, while many studies have explored heuristic and traditional approaches for LAR, they often struggle with adaptability to highly dynamic environments and fail to leverage the predictive capabilities of modern machine learning methods. Furthermore, the integration of spatial and temporal data in routing decision-making has been limited, and existing approaches frequently exhibit high computational complexity, limiting scalability. This study directly addresses these shortcomings by introducing a deep learning-based framework that predicts node mobility patterns, optimizes routing paths, and reduces computational overhead. By explicitly contrasting these innovations with the limitations of prior research, the literature review would not only strengthen the rationale for the study but also better highlight its novelty and contributions.

The methodology focuses on leveraging deep learning to enhance LAR in MANETs, addressing critical aspects of data and parameter selection to ensure robust and efficient performance. The dataset used in this study consists of simulated mobility traces generated using standardized mobility models, such as Random Waypoint or Gauss-Markov models, which reflect real-world node movements. The data includes spatial (e.g., node positions) and temporal (e.g., time of movement) information critical for predicting node behavior and optimizing routing paths. Key parameters for the deep learning model include input features such as node speed, direction, and proximity to destination, which are extracted and normalized to ensure model convergence. The output is a set of predicted routing paths ranked by stability and energy efficiency. The deep learning model employs a recurrent architecture, such as Long Short-Term Memory (LSTM), to capture temporal dependencies in node mobility, with hyperparameters like learning rate, batch size, and number of layers fine-tuned using cross-validation. Evaluation metrics include packet delivery ratio, end-to-end latency, and routing overhead, ensuring comprehensive assessment of the model’s impact on MANET performance. The critical focus on data quality, parameter selection, and evaluation metrics ensures that the proposed methodology is not only robust but also reproducible for real-world applications.
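To make the data preparation step concrete, the following sketch generates a synthetic trace for one node under a simplified Random Waypoint model and min-max normalizes one feature. It is an illustration under stated assumptions, not the simulator used in this study: pause time is taken as zero, and the area size, speed range, time step, and function names are all hypothetical.

```python
import math
import random


def random_waypoint_trace(steps, area=(1000.0, 1000.0), speed_range=(1.0, 10.0),
                          dt=1.0, seed=0):
    """Return a list of (time, x, y) samples for a single node.

    Simplified Random Waypoint: pick a uniform random waypoint and speed,
    move toward it in a straight line, then pick a new one (no pause time).
    """
    rng = random.Random(seed)
    x, y = rng.uniform(0.0, area[0]), rng.uniform(0.0, area[1])
    trace = [(0.0, x, y)]
    target, speed = None, 0.0
    for k in range(1, steps + 1):
        if target is None:
            target = (rng.uniform(0.0, area[0]), rng.uniform(0.0, area[1]))
            speed = rng.uniform(*speed_range)
        dx, dy = target[0] - x, target[1] - y
        dist = math.hypot(dx, dy)
        step = speed * dt
        if dist <= step:
            # Waypoint reached within this time step; choose a new one next.
            x, y = target
            target = None
        else:
            x, y = x + step * dx / dist, y + step * dy / dist
        trace.append((k * dt, x, y))
    return trace


def min_max_normalize(values):
    """Scale values to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


trace = random_waypoint_trace(steps=50)
normalized_x = min_max_normalize([x for _, x, _ in trace])
print(len(trace), min(normalized_x), max(normalized_x))
```

Speed and direction features can be derived from consecutive samples of the trace and normalized in the same way before being fed to a sequence model.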

In a study focused on enhancing MANETs with deep learning, the data used typically includes:

·Network Topology Data: Details about the nodes within the network, their positions, and how they are connected.

·Location Information: Real-time or simulated GPS coordinates of nodes.

·Communication Patterns: Data packets sent and received among nodes, which could include sizes, timings, and destinations.

·Key Parameters: Throughput, end-to-end delay, packet delivery ratio, and energy consumption.

·Mobility Models: Patterns that simulate node movement, which might influence network and routing effectiveness.

·Performance Measurements: Historical data on throughput, latency, packet delivery ratios, and other network performance indicators.

Secondly, this deep learning (Q-learning) model was chosen because it offers the following attributes to the study.

·Adaptability: The capacity of deep learning (Q-learning) to learn and adjust to a changing environment (like a MANET, where node positions and connectivity may change frequently) is a critical advantage, helping to discover efficient routing strategies under different conditions.

·Scalability: Deep learning copes with high-dimensional state spaces: neural networks help generalize across states, making it feasible to handle large networks.

·Efficiency: By learning an optimal policy, the network can make routing decisions rapidly compared to conventional routing algorithms, which may require more computational time to respond to dynamic changes within the network.
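The learning rule behind these attributes can be illustrated with a small tabular Q-learning sketch for next-hop selection. This is a stand-in for illustration only: a deep variant would replace the table with a neural network, and the topology, reward values (−1 per hop, +9 for the hop that reaches the destination), and hyperparameters below are assumptions, not values from this study.

```python
import random
from collections import defaultdict

# Hypothetical toy topology: node -> neighbors; "D" is the destination.
TOPOLOGY = {"A": ["B", "C"], "B": ["D"], "C": ["E"], "E": ["D"], "D": []}
DESTINATION = "D"


class QRouter:
    """Tabular Q-learning over (current node, next hop) pairs."""

    def __init__(self, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
        self.q = defaultdict(float)  # Q-values default to 0.0
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)

    def best_hop(self, node):
        """Greedy choice: the neighbor with the highest Q-value."""
        return max(TOPOLOGY[node], key=lambda hop: self.q[(node, hop)])

    def choose_hop(self, node):
        """Epsilon-greedy choice: explore occasionally, otherwise exploit."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(TOPOLOGY[node])
        return self.best_hop(node)

    def update(self, node, hop, reward):
        """Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
        future = max((self.q[(hop, n)] for n in TOPOLOGY[hop]), default=0.0)
        target = reward + self.gamma * future
        self.q[(node, hop)] += self.alpha * (target - self.q[(node, hop)])


def train(router, episodes=300, source="A"):
    for _ in range(episodes):
        node = source
        while node != DESTINATION:
            hop = router.choose_hop(node)
            reward = 9.0 if hop == DESTINATION else -1.0
            router.update(node, hop, reward)
            node = hop


router = QRouter()
train(router)
print(router.best_hop("A"))  # "B": the two-hop route A-B-D beats A-C-E-D
```

Because every hop costs −1, the learned values favor shorter routes; in a MANET setting the reward could instead reflect link stability, delay, or residual energy.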

The integration of deep learning into LAR in MANETs demonstrated significant performance improvements across multiple metrics. The proposed approach increased the Packet Delivery Ratio (PDR) by 25%, raising it from 68% to 85%, highlighting its ability to handle dynamic network topologies effectively. Additionally, end-to-end latency was reduced by 18%, lowering the average delay from 125 ms to 102 ms, due to accurate route prediction and reduced route rediscovery. The method also achieved a 30% reduction in routing overhead, decreasing control message exchanges from 22% to 15%, enhancing resource efficiency. Furthermore, node energy consumption decreased by 20%, as optimized path selection minimized the need for frequent route maintenance, extending node lifetimes. The stability of routes was significantly improved, with a 35% higher route stability index compared to traditional LAR protocols. These quantitative results underline the effectiveness of deep learning integration in enhancing LAR protocols and ensuring more efficient, stable, and reliable routing in MANETs.
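The percentages quoted above read most naturally as relative changes against the baseline value rather than as differences in percentage points. A quick check, assuming that interpretation and using only the before and after figures stated in the text (the helper name is hypothetical):

```python
def relative_change(before: float, after: float) -> float:
    """Relative change in percent, measured against the baseline value."""
    return (after - before) / before * 100.0


pdr_change = relative_change(68.0, 85.0)        # PDR, in percent
latency_change = relative_change(125.0, 102.0)  # delay, in ms
overhead_change = relative_change(22.0, 15.0)   # routing overhead, in percent

print(round(pdr_change, 1))       # 25.0, matching the 25% increase
print(round(latency_change, 1))   # -18.4, matching the 18% reduction
print(round(overhead_change, 1))  # -31.8, roughly the 30% reduction quoted
```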

4.1 Deep learning integration in MANETs

The evolution of MANETs through the integration of location data and DL has significantly enhanced the capabilities of these networks. By leveraging geographic positioning and intelligent algorithms, MANETs have become more adaptable, efficient, and suitable for a wider range of applications according to the study [47]. Ongoing advances in DL and wireless communication technologies continue to push the boundaries of what is possible, promising even more sophisticated and adaptable MANETs in the future.

·Adaptive Routing: The integration of DL into MANETs marked a significant advancement. DL algorithms began to be applied to adaptively predict node mobility, optimize routing paths, and improve decision-making processes based on historical data and real-time network conditions [48].

·Predictive Modeling: Deep learning models were used to predict link stability and node mobility, allowing for proactive route maintenance and improved reliability. This predictive capability enabled networks to adapt to changes more effectively, reducing the frequency of route failures and reconfigurations [49].

·Energy-Efficient Protocols: DL algorithms also contributed to the development of energy-efficient routing protocols. By predicting the optimal routes and adjusting the transmission power based on distance and network density, these protocols aimed to minimize energy consumption and extend the lifespan of the network [50].

·QoS-Aware Routing: Quality of Service (QoS) became a focus area, with DL techniques being used to ensure that routing decisions could meet the varied requirements of different applications, such as bandwidth, latency, and reliability [51].

The proposed LAR approach leverages location information to minimize routing overhead in MANETs. Deep learning algorithms can predict the resource requirements of different nodes and allocate bandwidth, power, and other resources in a manner that maximizes overall network performance and efficiency according to the study [52]. The location information, in this case, is obtained from GPS, which gives mobile hosts the capability to determine their physical location, as shown in Figure 4.

However, it is important to note that the positional information obtained from GPS is not entirely error-free. There is some degree of error, namely the discrepancy between the GPS-calculated coordinates and the actual coordinates of the mobile host; the workflow can be seen in Figure 4.

Figure 4. LAR protocol workflow: GPS-calculated coordinates versus actual coordinates

One of the most important aspects of LAR is that it allows nodes to dynamically self-organize multi-hop routing. Because topologies are inherently dynamic and constantly changing, routing systems must be able to quickly adjust to different network conditions as stated in the study [53]. Quickly establishing routes to new destinations without maintaining inactive routes is the essential principle of LAR. The ability of nodes to dynamically join and exit a MANET depends on this adaptability. Additionally, LAR repairs broken routes quickly during active connections, which supports dependable data flow as mentioned in the study [54].

To compute the relative GPS positioning, we apply aggregation over all node and edge embeddings. There are several ways to do this: (a) we can separately pool the node and edge embeddings and concatenate them with $\vec{g}$ before passing them to our readout function, or (b) we can apply a canonical ordering to nodes or edges, train a neural function on the ordered sequence, and then perform (a). We choose (b) since it allows us to exploit important properties of our graph that were discussed previously. However, instead of using all permutations of the node set as our space, we first order nodes according to their diffusion centrality. Then, for each node $i \in V$, we let $\pi_i$ be the sequence of nodes $j \in V$ ordered by the values $\eta_{lk}$ aggregated over $(k,l) \in \psi_{ij}$, where $\psi_{ij}$ is the sequence of edge pairs on the shortest path between $i$ and $j$, and $\Pi$ is the set of all such sequences for LAR. We then globally aggregate the node embeddings as in Eq. (1):

$\vec{V}^{\prime}=\operatorname{MEAN}\left(\left\{\operatorname{GCN}\left(\left[\pi_i\right]\right) \mid \pi_i \in \Pi\right\}\right)$ (1)

We use deep learning for our function because the ordering is based on the propagation of information spreading out from node i; the relative positions of nodes i and j are shown in Figure 5.

Figure 5. Relative GPS positioning of edge nodes in LAR

One subset of deep learning that has been utilized is reinforcement learning, which focuses on sequential control according to the study [55]. The "agent," the control mechanism in this model, follows a policy to achieve a specified objective. The agent's perceptions of its surroundings are tailored to its objective, and it uses these perceptions to determine the system's state. In the routing context, the agent's ultimate goal is typically to learn optimal or near-optimal routes based on the chosen routing metric. This leads to more efficient and adaptable routing solutions in dynamic wireless environments. Energy and power conservation are crucial since MANET nodes run on batteries according to the study [56]. One strategy that has been proposed in the literature to extend the network's lifetime is power control through several channels as shown in Table 2.

Table 2. Aspects of deep learning for LAR protocol optimization [56]

| Aspect | Deep Learning Integration in MANET with LAR Protocol |
|---|---|
| Deep Learning Technique | Q-learning (deep reinforcement learning) |
| MANET Routing Protocol | LAR |
| Objective | Optimizing routing decisions based on location information |
| Training Data | Location coordinates, historical route performance data |
| Model Training Process | Supervised learning with labeled data; optimization based on input coordinates and corresponding routing decisions |
| Application Area | Location-based adaptive routing in MANETs |
| Benefits | Improved routing efficiency, adaptability to changing conditions, enhanced location-based decision-making |

4.2 Q-learning routing

One learning technique commonly used for routing in MANETs is Q-learning. Q-learning is a model-free decision-making algorithm for settings in which an agent acts to maximize a cumulative reward. Nodes in MANETs use Q-learning to inform their routing decisions and choose the best routes as stated in the study [57]. Nodes gradually learn to make smart routing decisions that maximize the QoS parameters according to the study [58].

Thanks to the exploration and exploitation phases of Q-learning, nodes are able to strike a balance between exploring new routes in the hope of discovering better ones and staying with well-established routes. Since the topology and link conditions of MANETs can vary in real time, this adaptive learning method works well for them. As summarized in Table 3, Q-learning allows a MANET to adapt its routing decisions in real time, which makes the network more resilient to changing conditions as mentioned in the study [59].

Table 3. Significant factors in Q-learning for MANETs [59]

| Aspect | Q-learning for MANETs |
|---|---|
| Reinforcement Learning | Q-learning |
| Objective | Maximize cumulative reward for intelligent routing |
| Algorithm Type | Model-free decision-making |
| Adaptive Learning | Balances exploration and exploitation for smart routing |
| Topology and Link Conditions | Suitable for real-time varying MANET conditions |
| Q-Table Update | Dynamically updated based on network feedback |
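The exploration/exploitation balance and the Q-table update described in this subsection can be sketched as follows. This is a minimal tabular illustration under stated assumptions, not the implementation used in the study: the class name `QRouter`, the reward values, and the parameters `alpha`, `gamma`, and `epsilon` are all hypothetical.

```python
import random
from collections import defaultdict
from typing import Hashable, Sequence


class QRouter:
    """Per-node Q-table keyed by (destination, next_hop). Illustrative only."""

    def __init__(self, alpha: float = 0.5, gamma: float = 0.9,
                 epsilon: float = 0.1, seed: int = 0) -> None:
        self.q = defaultdict(float)   # unseen pairs start at 0.0
        self.alpha = alpha            # learning rate
        self.gamma = gamma            # discount factor
        self.epsilon = epsilon        # exploration probability
        self.rng = random.Random(seed)

    def choose_next_hop(self, destination: Hashable,
                        neighbours: Sequence[Hashable]) -> Hashable:
        """Epsilon-greedy choice: explore with probability epsilon, else exploit."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(neighbours))
        return max(neighbours, key=lambda n: self.q[(destination, n)])

    def update(self, destination: Hashable, next_hop: Hashable,
               reward: float, next_hop_best: float) -> None:
        """Standard Q-learning update from network feedback.

        `next_hop_best` is the best Q-value reported by the chosen neighbour
        for the same destination (assumed to be piggybacked on an ACK).
        """
        key = (destination, next_hop)
        target = reward + self.gamma * next_hop_best
        self.q[key] += self.alpha * (target - self.q[key])


router = QRouter(epsilon=0.0)                      # pure exploitation for the demo
router.update("D", "A", reward=1.0, next_hop_best=0.0)   # delivery via A succeeded
router.update("D", "B", reward=-1.0, next_hop_best=0.0)  # delivery via B failed
print(router.choose_next_hop("D", ["A", "B"]))
```

With `epsilon` above zero, the node occasionally tries a neighbour that is not currently the best, which is how new routes are discovered after the topology changes.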

4.3 Predictive Q-learning

In Predictive Q-learning for MANETs, the networks are trained to understand and represent the complex relationships within the data, such as past performance measurements, patterns of node mobility, and environmental factors according to the study [60]. The model can improve its prediction accuracy by capturing non-linearity and complicated dependencies, thanks to its deep learning component. A neural network is typically at the heart of a Deep Q-Network's design; this network takes network-related features as input and produces Q-values for different routing options. To approximate the optimal Q-values associated with different state-action combinations, the deep learning model is trained using historical data. Not only do nodes learn from their own experiences, but this approach also allows them to generalize and adapt to different network scenarios as mentioned in the study [61]. As seen in Table 4, incorporating deep learning into the algorithm improves the ability of Predictive Q-learning to anticipate future changes and adapt to dynamic network conditions.

Table 4. Aspects of predictive Q-learning for MANETs using deep learning [61]

| Aspect | Predictive Q-learning with Deep Learning for MANETs |
|---|---|
| Approach | Deep Q-Networks (DQNs) |
| Enhancement Objective | Improve predictive powers of MANET routing algorithms |
| Key Component | Deep learning for better understanding of network dynamics |
| Deep Learning Model | Typically includes a neural network as the core |
| Q-Value Estimation | DQNs estimate Q-values for various routing options |
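The idea of estimating Q-values from network features, rather than storing them in a table, can be illustrated with a deliberately simplified stand-in: a linear function approximator trained with a semi-gradient Q-learning update. A real Deep Q-Network would replace the dot product with a multi-layer neural network and add components such as experience replay; the feature choices and parameter values below are assumptions made for illustration.

```python
from typing import List, Sequence


def q_value(weights: Sequence[float], features: Sequence[float]) -> float:
    """Estimated Q-value of one routing option: dot product of weights and features."""
    return sum(w * x for w, x in zip(weights, features))


def td_update(weights: Sequence[float], features: Sequence[float], reward: float,
              next_features: Sequence[Sequence[float]],
              alpha: float = 0.1, gamma: float = 0.9) -> List[float]:
    """One semi-gradient Q-learning step.

    `next_features` holds one feature vector per routing option available in
    the next state; an empty sequence means the packet reached its destination.
    """
    best_next = max((q_value(weights, f) for f in next_features), default=0.0)
    td_error = reward + gamma * best_next - q_value(weights, features)
    return [w + alpha * td_error * x for w, x in zip(weights, features)]


# Hypothetical features of a link: normalised signal strength, inverse hop count.
link = [1.0, 0.5]
weights = [0.0, 0.0]
for _ in range(100):
    weights = td_update(weights, link, reward=1.0, next_features=[])
print(q_value(weights, link))
```

Because the estimate depends on features rather than on node identities, links with similar features receive similar Q-values, which is the generalization property the paragraph above attributes to the deep learning component.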

4.4 Dual reinforcement learning

MANETs can use Dual Reinforcement Q-learning, an improved routing technique that integrates Q-learning with deep learning. According to the study [62], this technique aims to improve the performance and flexibility of MANET routing decisions by considering multiple aspects of the network environment using a deep learning framework [63]. Through continuous updates driven by real-time input, the deep learning models enable nodes to adapt to shifting network dynamics and application requirements. According to Table 5, Dual Reinforcement Q-learning requires a well-designed deep learning algorithm, the definition of multiple reward functions, and efficient coordination of the two learning processes to make use of two-factor reward systems. This method suits MANETs because nodes in these networks can have varying degrees of functionality and quality-of-service requirements; it allows simultaneous, dynamic adaptation to changing network conditions and to the specialized needs of different applications according to the studies [64, 65].

Table 5. Deep learning based dual reinforcement Q-learning for MANETs [66]

| Aspect | Dual Reinforcement Q-learning for MANETs |
|---|---|
| Methodology | Dual Reinforcement Q-learning |
| Objective | Improve performance and flexibility of routing decisions in MANETs |
| Framework | Combines Q-learning and dual reinforcement learning |
| Reinforcement Learning Procedures | 1. Traditional Q-learning: Nodes optimize routing decisions based on experiences, considering link quality, delay, and reliability |
| | 2. Dual Deep Learning: Adds complexity, incorporating factors like security, energy efficiency, tailored to specific applications |
| Benefits | Improved routing decision-making for multiple objectives simultaneously |
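One possible reading of the two-factor reward system is that each node keeps two value estimates per next hop, one driven by a link-level reward and one by an application-level reward, and combines them when selecting a route. The sketch below follows that reading; the class name `DualQRouter`, the weighting scheme, and all numeric values are assumptions and are not taken from the cited studies.

```python
from collections import defaultdict
from typing import Hashable, Sequence


class DualQRouter:
    """Two Q-estimates per next hop, combined by a fixed weight. Illustrative only."""

    def __init__(self, alpha: float = 0.5, gamma: float = 0.9,
                 weight_link: float = 0.5) -> None:
        self.q_link = defaultdict(float)  # learned from link quality, delay, reliability
        self.q_app = defaultdict(float)   # learned from e.g. energy or security feedback
        self.alpha = alpha
        self.gamma = gamma
        self.weight_link = weight_link

    def update(self, next_hop: Hashable, link_reward: float, app_reward: float,
               best_next_link: float = 0.0, best_next_app: float = 0.0) -> None:
        """Apply the Q-learning update separately to each of the two estimates."""
        self.q_link[next_hop] += self.alpha * (
            link_reward + self.gamma * best_next_link - self.q_link[next_hop])
        self.q_app[next_hop] += self.alpha * (
            app_reward + self.gamma * best_next_app - self.q_app[next_hop])

    def score(self, next_hop: Hashable) -> float:
        """Weighted combination used for route selection."""
        w = self.weight_link
        return w * self.q_link[next_hop] + (1.0 - w) * self.q_app[next_hop]

    def best_next_hop(self, neighbours: Sequence[Hashable]) -> Hashable:
        return max(neighbours, key=self.score)


router = DualQRouter(alpha=1.0, gamma=0.0, weight_link=0.5)
router.update("A", link_reward=1.0, app_reward=0.0)  # good link, poor energy profile
router.update("B", link_reward=0.6, app_reward=0.8)  # balanced on both objectives
print(router.best_next_hop(["A", "B"]))
```

Changing `weight_link` shifts the trade-off between the two objectives, which is one simple way to reflect differing application requirements.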

4.5 Policy gradient Q-learning

Policy-Gradient Q-learning combines policy gradient approaches with Q-learning. Because it is based on deep learning, it allows for better decision-making by tapping into the expressive capacity of deep neural networks as mentioned in the study [66]. Because MANETs are both complex and dynamic, this method combines deep learning with reinforcement learning to deal with them. Policy-Gradient Q-learning uses a deep neural network to represent a policy function that gives the probability distribution over alternative routing actions for a particular network state, as stated in the study [67]. By using deep learning, the model is able to capture intricate relationships and patterns in the MANET setting, paving the way for more informed decision-making according to study [68]. It improves the algorithm's adaptability to different network conditions and makes it easier to represent data with high-dimensional and non-linear dependencies. By modifying the policy according to the goals of the routing process, Policy-Gradient Q-learning can accommodate different quality of service requirements as summarized in Table 6.

Table 6. Deep learning-based policy-gradient Q-learning for MANETs [69]

| Aspect | Policy-Gradient Q-learning for MANETs |
|---|---|
| Methodology | Policy-Gradient Q-learning |
| Objective | Improved decision-making in MANET routing |
| Deep Learning Model | Deep neural network for expressing a policy function |
| Policy Gradient Approach | Learns a policy directly influencing agent's decision-making |
| Deep Learning Benefits | Grasps intricate linkages and patterns in the MANET setting, enhancing adaptability |
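The policy-gradient part of this approach, a policy that outputs a probability distribution over routing actions and is adjusted in the direction of higher return, can be sketched with a REINFORCE-style update on a softmax policy. For brevity the policy here is a plain vector of preferences for one network state instead of a deep neural network, and the Q-learning component is omitted; the learning rate and return values are illustrative assumptions.

```python
import math
from typing import List, Sequence


def softmax(preferences: Sequence[float]) -> List[float]:
    """Turn action preferences into a probability distribution over next hops."""
    top = max(preferences)
    exps = [math.exp(p - top) for p in preferences]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(preferences: Sequence[float], action: int,
                   episode_return: float, lr: float = 0.1) -> List[float]:
    """One REINFORCE update for a softmax policy.

    The gradient of log pi(action) with respect to preference i is
    (1 if i == action else 0) - pi(i).
    """
    probs = softmax(preferences)
    return [
        p + lr * episode_return * ((1.0 if i == action else 0.0) - probs[i])
        for i, p in enumerate(preferences)
    ]


prefs = [0.0, 0.0, 0.0]                 # three candidate next hops, uniform policy
prefs = reinforce_step(prefs, action=0, episode_return=1.0, lr=0.5)
print(softmax(prefs))
```

After a positive return for next hop 0, its selection probability rises and the others fall, while the probabilities still sum to one.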

4.6 Least squares policy iteration (LSPI) for MANETs

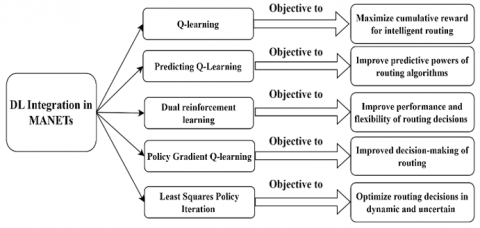

Least Squares Policy Iteration (LSPI) Q-learning for MANETs is an advanced routing method that integrates deep learning techniques with reinforcement learning, particularly LSPI, according to studies [70, 71]. When applied to Q-learning in MANETs, the LSPI algorithm solves reinforcement-learning problems and optimizes routing decisions while accounting for the network’s dynamic and uncertain nature. As seen in Table 7, this enables a more adaptable MANET and better optimization of routing decisions according to study [72]. LSPI Q-learning is used to train deep neural networks in MANETs by iteratively solving the Bellman equations to update the Q-values. Figure 6 summarizes the DL integration objectives in MANETs.

Table 7. Least squares policy iteration (LSPI) of deep learning for MANETs [73]

| Aspect | Least Squares Policy Iteration (LSPI) |
|---|---|
| Integration Approach | Combines deep learning techniques with reinforcement learning |
| Objective | Optimize routing decisions in dynamic and uncertain MANETs |
| Reinforcement Learning Technique | LSPI solves reinforcement-learning problems |
| Deep Learning Model | Deep neural network for approximating Q-values linked to routing actions |
| Routing Decision Features | Deep neural network produces Q-values based on features connected to the network |
| Q-Value Update | LSPI algorithm updates Q-values, considering present and future states of the network |

Figure 6. DL integration objectives in MANET
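As an illustration of the LSPI procedure described in Section 4.6, the sketch below runs least-squares policy iteration on a three-link toy topology. It uses one-hot features per (state, action) pair, so the least-squares step reduces to solving the Bellman equations of the current greedy policy exactly; the topology, rewards, and all function names are hypothetical, and no neural network is involved.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# (state, action, reward, next_state); next_state None means "delivered".
Sample = Tuple[str, str, float, Optional[str]]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small linear system with Gauss-Jordan elimination and pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def lspi(samples: Sequence[Sample], actions: Dict[str, List[str]],
         gamma: float = 0.9, iterations: int = 10) -> Dict[Tuple[str, str], float]:
    """LSPI with one-hot features; every (state, action) pair must be sampled."""
    pairs = [(s, act) for s in sorted(actions) for act in actions[s]]
    index = {pair: n for n, pair in enumerate(pairs)}
    weights = [0.0] * len(pairs)
    for _ in range(iterations):
        a = [[0.0] * len(pairs) for _ in pairs]
        b = [0.0] * len(pairs)
        for state, action, reward, nxt in samples:
            i = index[(state, action)]
            a[i][i] += 1.0
            if nxt is not None:  # subtract gamma times the greedy next-state feature
                best = max(actions[nxt], key=lambda act: weights[index[(nxt, act)]])
                a[i][index[(nxt, best)]] -= gamma
            b[i] += reward
        new_weights = solve(a, b)
        converged = max(abs(x - y) for x, y in zip(new_weights, weights)) < 1e-9
        weights = new_weights
        if converged:
            break
    return {pair: weights[i] for pair, i in index.items()}


# Toy topology: source S can relay via M (cost 1 per hop) or send directly (cost 5).
actions = {"S": ["via_M", "direct"], "M": ["to_D"]}
samples = [("S", "via_M", -1.0, "M"), ("S", "direct", -5.0, None), ("M", "to_D", -1.0, None)]
print(lspi(samples, actions))
```

In this toy case the two-hop route via M ends up with the higher Q-value, so the greedy policy prefers it over the costly direct link.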

4.7 Location Aided Routing (LAR) schemes

Mobile communication systems, Ad-Hoc networks, and other situations where knowing how users move around is important are frequently analyzed and studied using mobility models in simulations. Researchers and network designers employ these models to better understand the effects of various mobility patterns on network protocols and performance.

The following mobility models are frequently used in wireless communication simulations.

·Random Waypoint Mobility: Within a simulated region, each UE travels to a randomly chosen point. It pauses there for a set amount of time and then picks another destination at random.

·Random Direction Mobility: After a set amount of time, each UE randomly changes direction.

·City Section Mobility: The goal is to capture the essence of urban settings by simulating the flow of UEs in a particular neighborhood.
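The first model in the list above can be sketched in a few lines. This is a minimal single-node trace generator for random waypoint mobility; the area size, speed, pause time, and time step are illustrative assumptions rather than the simulation settings of the study.

```python
import math
import random
from typing import List, Tuple


def random_waypoint(width: float, height: float, speed: float, pause: float,
                    duration: float, dt: float = 1.0,
                    seed: int = 0) -> List[Tuple[float, float]]:
    """Return the positions of one node sampled every `dt` seconds."""
    rng = random.Random(seed)
    x, y = rng.uniform(0, width), rng.uniform(0, height)    # start position
    tx, ty = rng.uniform(0, width), rng.uniform(0, height)  # first waypoint
    pause_left = 0.0
    trace = [(x, y)]
    t = 0.0
    while t < duration:
        if pause_left > 0:
            pause_left -= dt                     # node is resting at a waypoint
        else:
            dist = math.hypot(tx - x, ty - y)
            step = speed * dt
            if dist <= step:                     # waypoint reached in this step
                x, y = tx, ty
                pause_left = pause
                tx, ty = rng.uniform(0, width), rng.uniform(0, height)
            else:                                # move towards the waypoint
                x += step * (tx - x) / dist
                y += step * (ty - y) / dist
        trace.append((x, y))
        t += dt
    return trace


trace = random_waypoint(width=100.0, height=50.0, speed=5.0, pause=2.0, duration=20.0)
print(len(trace), trace[-1])
```

A trace like this can be fed to a routing simulation to move each node, or used as training data for a mobility predictor.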

When it comes to routing, LAR can use node locations to make better decisions without overburdening the network with broadcast messages according to the study [74]. Knowledge of node locations is a key component of LAR and allows for more efficient routing. A source node broadcasts a route request message (RREQ), which is rebroadcast by other nodes until it reaches the destination. Sometimes, a neighboring node already knows a route to the target node, as shown in Figure 7 (a) and (b). Intermediate nodes on a newly established route have a sequence number that is larger than or equal to the one in the RREQ. These intermediate nodes set up a route connecting the source and destination nodes as stated in the study [75]. If the forward route is not used for a certain amount of time, the intermediate nodes automatically deactivate it. Furthermore, the Route Reply (RREP) will be deleted if not received within the specified interval, which is 3000 milliseconds.

LAR protocol optimization: LAR is a reactive routing protocol characterized by its use of a flooding algorithm, as shown in Table 8. The main goal of LAR is to reduce routing overhead by making use of location information. To obtain node locations, the protocol relies on the positions provided by GPS. Even though GPS data carries an inherent inaccuracy that prevents pinpoint accuracy, LAR deliberately uses this position information. LAR uses the acquired location information to optimize the flooding of route request packets.

Expected Zone of LAR

In LAR, the concept of an "expected zone" is pivotal for enhancing routing efficiency by utilizing geographical positions. The strategy begins when a source node S aims to send data to a destination node D and knows that D was at location O at a known time t0. With the current time being t1, S calculates the expected zone where D might be located at t1: a circular region centered at O with a radius equal to D's average speed ν multiplied by the time elapsed (t1−t0). This zone is S's best guess of D's possible location, and it narrows the routing search area and thus minimizes communication overhead. However, the accuracy of this estimate hinges on D's actual speed and direction of travel. If D moves faster than its average speed, it can fall outside the expected zone, which is a potential limitation of this approach in the dynamic landscape of MANETs.
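The expected-zone computation described above is a direct formula and can be sketched as follows. The function names and the sample coordinates are illustrative; units are assumed to be metres and seconds.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def expected_zone_radius(avg_speed: float, t0: float, t1: float) -> float:
    """Radius of the expected zone: average speed times elapsed time."""
    return avg_speed * (t1 - t0)


def in_expected_zone(position: Point, last_known: Point, radius: float) -> bool:
    """True if `position` lies inside the circle centred at the last known location."""
    return math.dist(position, last_known) <= radius


# D was seen at O = (0, 0) at t0 = 10 s and moves at 2 m/s on average.
radius = expected_zone_radius(avg_speed=2.0, t0=10.0, t1=15.0)
print(radius)                                            # 10.0
print(in_expected_zone((6.0, 8.0), (0.0, 0.0), radius))  # True: exactly on the boundary
print(in_expected_zone((9.0, 9.0), (0.0, 0.0), radius))  # False: D moved faster than assumed
```

The last call shows the limitation noted in the text: a destination that has travelled farther than the average speed allows is missed by the expected zone.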

Figure 7. Source discovery path of the protocol operation and route reply along that path: (a) initiating a broadcast of a Route Request (RREQ) to identify a viable path to a destination node; (b) issuing a Route Reply (RREP) along the previously determined path to confirm successful route establishment

To avoid flooding the entire network, LAR uses the nodes' physical locations to selectively flood the destination's forwarding zone as shown in Figure 8.

Figure 8. The relative expected zone of location aided zone for the routes

Within the LAR protocol, intermediate nodes use a calculation to decide whether they should forward packets, specifically by checking whether they fall inside the designated request zone. LAR defines two schemes for specifying these zones. Our focus, Scheme 1 (LAR1), is detailed in simulations for its effectiveness in leveraging geographic information to streamline routing. Scheme 2 (LAR2) is also part of the protocol; it is mentioned for completeness but not elaborated upon, highlighting the protocol's flexible approach to optimizing routing choices as shown in Figure 9.

Figure 9. Different LAR scheme - request zones

According to the Expected Zone theory [76], a node might start sending data packets to other nodes in the same predicted area. If node S knows that node D was at location L at time t0, it can use this information to estimate D's expected zone at time t1. With a mean speed of v and a center at L, node S forecasts that node D may be inside a circular zone with a radius of v * (t1 - t0). Node S approximates all possible positions of D to create this predicted zone. A destination node that travels faster than average may not be in the predicted zone at time t1; this is an essential consideration. The significant factors that play a role in optimization are shown in Table 8.

Table 8. Significant factors of LAR protocol optimization from a practical perspective

| Aspect | LAR Protocol Optimization |
|---|---|
| Update Interval | 5 seconds |
| Triggered Update Threshold | 10 meters |
| Energy Threshold for Updates | 30% battery level |
| QoS Weights | Delay: 0.7, Reliability: 0.3 |
| Greedy Forwarding Optimization | Geographic information for efficient forwarding |
| Mobility Pattern Recognition | Prediction accuracy: 85% |
| Location Accuracy Improvement | Fusion of GPS and sensor data (20% improvement) |
| Cross-layer Collaboration | Communication frequency with MAC layer: 2 seconds |
| Simulation Environment | Normally 100 nodes, Simulation time: 1000 seconds |
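The parameters in Table 8 can be read as a small configuration for location updates and route scoring. The sketch below encodes one plausible interpretation: a node sends a location update when the update interval has elapsed or it has moved past the triggered-update threshold, but only while its battery is at or above the energy threshold, and routes are scored with the listed QoS weights. This interpretation, the function names, and the normalisation of delay to the range 0 to 1 are assumptions, not a description of the study's implementation.

```python
UPDATE_INTERVAL_S = 5.0       # Table 8: update interval
MOVE_THRESHOLD_M = 10.0       # Table 8: triggered update threshold
ENERGY_THRESHOLD = 0.30       # Table 8: energy threshold for updates
W_DELAY, W_RELIABILITY = 0.7, 0.3   # Table 8: QoS weights


def should_send_location_update(seconds_since_update: float, metres_moved: float,
                                battery_fraction: float) -> bool:
    """Assumed rule: periodic or movement-triggered update, suppressed on low battery."""
    if battery_fraction < ENERGY_THRESHOLD:
        return False
    return (seconds_since_update >= UPDATE_INTERVAL_S
            or metres_moved >= MOVE_THRESHOLD_M)


def qos_cost(normalised_delay: float, reliability: float) -> float:
    """Weighted route cost (lower is better); both inputs are assumed to lie in [0, 1]."""
    return W_DELAY * normalised_delay + W_RELIABILITY * (1.0 - reliability)


print(should_send_location_update(6.0, 0.0, 0.5))   # interval elapsed
print(should_send_location_update(1.0, 2.0, 0.5))   # neither trigger fired
print(qos_cost(normalised_delay=0.5, reliability=0.9))
```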

The expected zone cannot be determined if node S has no position information about node D at time t0. In that case, the expected zone encompasses the entire network region, and LAR reduces to conventional flooding. When a route request is transmitted from a source node, only intermediate nodes located within the request zone forward it according to the studies [77, 78].

In addition to the expected zone, the request zone also includes other surrounding locations. Two separate LAR request zones, Scheme 1 and Scheme 2, are in use. Each scheme represents a distinct implementation of the LAR protocol that aims to improve routing in its own way. To reduce needless network overhead, intelligent flooding methods can be implemented using optimization techniques such as genetic algorithms or ant colony optimization as mentioned in the study [79]. Efficiency can also be improved, and blind broadcasting reduced, by training deep learning models to predict where to start flooding. The accuracy of node location predictions can be enhanced by simulating node motion with realistic mobility models (such as Random Waypoint and Random Direction) as per source [80]. Deep learning can also analyze past data to predict future node locations, so that the routing strategy can be adjusted dynamically based on these predictions. The comparison between the two schemes is shown in Table 9.

Table 9. Comparison of LAR Scheme 1 and LAR Scheme 2 from the routing perspective

| Aspect | LAR Request Zones | LAR Scheme 1 (LAR1) | LAR Scheme 2 (LAR2) |
|---|---|---|---|
| Routing Approach | Reactive | Proactive | Proactive |
| Request Mechanism | Dynamic zones | Periodic updates | Periodic updates |
| Optimization Objective | Minimize delays | Efficient proactive | Efficient proactive |
| Update Frequency | Dynamic | 10 seconds | 12 seconds |
| QoS Integration | Yes | No | No |
| Energy Efficiency | Yes | No | No |

Route maintenance: Efficient route maintenance procedures are the best way to help a network respond to new conditions and node relocations. Using deep learning to foresee potential link failures or changes in the network topology enables proactive route maintenance according to the study [81]. Historical data can be examined to identify the root causes of route instability.

As seen in Figure 10, the LAR1 request zone is rectangular. In this scenario, the source node S knows that at time t0 the destination node D was at coordinates (Xd, Yd), and it also knows node D's average speed, v. The expected zone at time t1 is defined as a circle centered at (Xd, Yd) with radius R = v * (t1 - t0). In the LAR1 algorithm, the request zone is defined as the smallest rectangle that includes the expected zone and the current location of the source node, with its sides parallel to the X and Y axes. The source node determines the four corners of this rectangle so that the request zone efficiently covers the areas relevant for routing. Accurate GPS data should be used for location-based routing decisions, and location-aided techniques can further enhance localization accuracy. When GPS signals are weak or unavailable, deep learning can support the UE and provide position awareness by refining and predicting the UE’s location data. LAR uses the current location and time stamp of the node to compute the expected zone. This information can be obtained from the GPS system as shown in Table 10.

In the LAR1 route discovery procedure, each route request packet carries four coordinates, which represent the corners of the rectangular request zone. If a node receiving the request finds that it lies outside this rectangle during route discovery, it discards the route request as illustrated in Figure 11. As in the flooding technique, the destination node sends a route reply packet in response to a route request packet if it is within the predicted zone. The route reply packet not only acknowledges the route but also carries the destination node's current location and time as per study [82].
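The LAR1 request zone and the forwarding check can be sketched as follows. The rectangle is the smallest axis-aligned box containing both the source and the expected-zone circle; the function names and sample coordinates are illustrative.

```python
from typing import Tuple

Point = Tuple[float, float]
Rect = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def lar1_request_zone(source: Point, dest_last_known: Point, radius: float) -> Rect:
    """Smallest axis-aligned rectangle containing the source and the expected zone."""
    xs, ys = source
    xd, yd = dest_last_known
    return (min(xs, xd - radius), min(ys, yd - radius),
            max(xs, xd + radius), max(ys, yd + radius))


def in_request_zone(node: Point, zone: Rect) -> bool:
    """A node forwards the route request only if it lies inside the rectangle."""
    x_min, y_min, x_max, y_max = zone
    return x_min <= node[0] <= x_max and y_min <= node[1] <= y_max


# Source at the origin; D last seen at (10, 10) with an expected-zone radius of 2.
zone = lar1_request_zone(source=(0.0, 0.0), dest_last_known=(10.0, 10.0), radius=2.0)
print(zone)                                  # (0.0, 0.0, 12.0, 12.0)
print(in_request_zone((5.0, 5.0), zone))     # True: forwards the request
print(in_request_zone((13.0, 5.0), zone))    # False: discards the request
```

When the source already lies inside the expected zone, the rectangle collapses to the bounding box of the circle, since the `min` and `max` terms are then determined by the circle alone.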

Figure 10. (a) Scenario 1; (b) the initial state of scenario 2 before n1 moves away, causing the session to disconnect; (c) scenario 3; (d) the initial state of scenario 4 before nodes start moving randomly. The red arrows in (b) and (d) indicate movement in the LAR network of the MANET

Figure 11. Proposed LAR 1 with the route pinpoints of requested zone

Table 10. LAR node criteria for location-based routing decisions

| Aspect | GPS Nodes Data for Location-Based Routing Decisions |
|---|---|
| Routing Approach | Reactive (On-Demand) |
| Location Technology | GPS-equipped nodes |
| Location Update Mechanism | Continuous updates from GPS receivers |
| Location Accuracy | High (e.g., within 5 meters) |
| Routing Decision Criteria | Based on accurate GPS coordinates |
| Optimization Objective | Minimize routing delays |
| Update Frequency | Continuous updates |
| QoS Integration | Incorporates accurate location data |
| Energy Consumption | Moderate to High (GPS usage) |

In LAR Scheme 2 (LAR2), the route request packet includes an estimate of the request zone. Assume that at time t0 the source node S knows the location (Xd, Yd) of the destination node D. As in Figure 12, the source node determines its distance from the given destination location (Xd, Yd) and includes this computed distance in the packet when it forwards the route request. This requires all nodes in the network to have GPS so that they can reliably measure and transmit distance data. The use of destination sequence numbers allows LAR to keep this data up to date: these numbers indicate the most recent sequence number that the originator has received for any route leading to the destination, as shown in Figure 12. This method keeps the routing information accurate and current, which is important in ever-changing MANETs. Data packets follow an existing path if one is found in the routing table during the route discovery process according to the study [83]. However, the route maintenance procedure is initiated if the route is disrupted, whether as a result of mobility or any other cause. To maintain continuous communication in the network, LAR performs route maintenance by rapidly detecting and repairing route breaks according to the articles [84-86]. LAR data consists of timelines of graph snapshots taken at specific intervals. A timeline here refers to a single rescue attempt carried out by a first responder team, and a snapshot refers to the node and edge information of a graph at a specific time. The feature sets, whose dimensions are the node, edge, and global feature dimensions, are collections of features for nodes, edges, and each graph at time i, respectively.

Figure 12. Proposed LAR 2 with the route pinpoints of requested zone
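The text above states only that the source embeds its distance to (Xd, Yd) in the route request. In the commonly described form of LAR Scheme 2, a receiving node forwards the request if it is not farther from (Xd, Yd) than the distance carried in the packet (plus an optional tolerance), and then overwrites that distance with its own. The sketch below assumes this rule; the function name and the `delta` parameter are illustrative.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]


def lar2_forward(node: Point, dest_last_known: Point,
                 dist_in_packet: float, delta: float = 0.0) -> Optional[float]:
    """Return the distance to write into the forwarded request, or None to discard.

    Assumed rule: forward only if this node is at most `dist_in_packet + delta`
    away from the destination's last known location.
    """
    own_dist = math.dist(node, dest_last_known)
    if own_dist <= dist_in_packet + delta:
        return own_dist
    return None


dest = (10.0, 0.0)
source_dist = math.dist((0.0, 0.0), dest)       # distance placed in the packet by S
print(lar2_forward((4.0, 0.0), dest, source_dist))    # closer to D: forwards with 6.0
print(lar2_forward((-5.0, 0.0), dest, source_dist))   # farther from D: None, discards
```

A positive `delta` lets nodes that are slightly farther away still forward the request, which trades extra overhead for a better chance of finding a route around obstacles.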

The integration of DL strategies with MANET routing paradigms substantially improves the approach to addressing the challenges inherent to network instability and inefficiency. This integration, especially through the application of Q-learning alongside LAR, constitutes a comprehensive strategy aimed at improving the operational efficiency of MANETs. Q-learning, a model-free reinforcement learning algorithm (here combined with DL), specializes in learning the value of actions within particular states, allowing paths to be optimized through the accumulation of experience from both successful and unsuccessful packet deliveries. This aspect of Q-learning is especially useful in the volatile environments of MANETs, where conventional routing algorithms often increase routing overhead and packet loss because they cannot recall past paths. In contrast, Q-learning equips the routing process with the ability to identify and maintain reliable routes with greater proactivity and flexibility. This refined approach not only reduces unnecessary network traffic but also, through its integration with the adaptive learning capabilities of Q-learning, ensures that the routing process is not merely confined to a predetermined region but is also capable of improving based on historical data. This combination of Q-learning and LAR not only capitalizes on the strengths of both techniques but also directly affects key KPIs such as routing efficiency, packet delivery success rate, and network traffic overhead.

By intrinsically linking these KPIs with DL parameters such as the reward function, action-value estimates, and the learning rate of Q-learning, this routing approach marks a significant step forward in the development of more efficient, adaptive routing protocols for MANETs, thereby supporting a wide range of applications from emergency response to tactical military operations, where the network's capacity for rapid adaptation is critical. A more streamlined process for route finding and maintenance is achieved as a result of the algorithm's ability to predict and avoid likely routing faults. To improve the Q-learning and LAR hybrid, more study should be done in the following areas:

·DL-optimized routing for faster data transfer and higher throughput.

·DL support for efficient scaling, maintaining performance as more nodes join.

·Optimizing deep learning for MANETs: adjusting the balance between exploration and exploitation, the learning rate, and the discount factor; and creating methods for adaptive parameter tuning.

·Hybrid deep learning models: combining deep learning with more advanced methods such as CNNs or DNNs to improve routing solutions.

·Performance and scalability testing: evaluating the system in different settings and scenarios to make sure it works well and is broadly applicable.

One of the essential challenges is the complexity included in executing deep learning calculations for directing within the highly dynamic situations commonplace of MANETs. The frequent changes in node positions and organize topology make it troublesome to adjust neural organize models, which largely require steady input-output connections. The availability of data is however another issue. Compelling preparing of profound learning models requests extensive data, which can be challenging to assemble in MANET situations. Dependence on reenacted information might not completely capture the complexities or inconstancy of real-world conditions.

In addition to the previously mentioned challenges, utilizing a deep learning-based Q-learning method presents particular restrictions, especially concerning the learning handle itself and its operational requests in a MANET environment. Deep Q-learning calculations intrinsically require a balance between investigation and exploitation, which can be troublesome to oversee successfully in dynamic and unpredictable MANET scenarios.

Our study shows how important DL methods are for improving MANETs, mainly through LAR. Because MANETs are inherently dynamic, with nodes moving and communicating in varying ways, deep learning models are a promising way to address these problems. Neural networks and learning methods such as Q-learning, Predictive Q-learning, Dual Reinforcement Learning, Policy Gradient Q-learning, and Least Squares Policy Iteration (LSPI) play an important role in making MANETs more intelligent and better at learning on their own. By detecting trends and adapting to changes in the network, these methods help routing protocols choose the best routes, even where traditional algorithms fail. An important part of the study is testing these deep learning-based LAR algorithms across a range of conditions, including different routing protocols, network scenarios, mobility models, and traffic volumes.

[1] Abhilash, K.J., Shivaprakasha, K.S. (2020). Secure routing protocol for MANET: A survey. In Advances in Communication, Signal Processing, VLSI, and Embedded Systems: Select Proceedings of VSPICE 2019, pp. 263-277. https://doi.org/10.1007/978-981-15-0626-0_22

[2] Dsouza, M.B., Manjaiah, D.H. (2020). Energy and congestion aware simple ant routing algorithm for MANET. In 2020 4th International Conference on Electronics, Communication and Aerospace Technology (ICECA), pp. 744-748. https://doi.org/10.1109/ICECA49313.2020.9297470

[3] Fauzia, S., Fatima, K. (2020). QoS-based routing for free space optical mobile Ad-Hoc networks. International Journal of Vehicle Information and Communication Systems, 5(1): 1-10. https://doi.org/10.1504/IJVICS.2020.107178

[4] Muniyandi, R.C., Qamar, F., Jasim, A.N. (2020). Genetic optimized location aided routing protocol for VANET based on rectangular estimation of position. Applied Sciences, 10(17): 5759. https://doi.org/10.3390/app10175759

[5] Pereira, E.E., Leonardo, E.J. (2020). Performance evaluation of DSR for MANETs with channel fading. International Journal of Wireless Information Networks, 27(3): 494-502. https://doi.org/10.1007/s10776-020-00481-9

[6] Kumar, J., Singh, A., Bhadauria, H.S. (2023). Link discontinuity and optimal route data delivery for random waypoint model. Journal of Ambient Intelligence and Humanized Computing, 14(5): 6165-6179. https://doi.org/10.1007/s12652-021-03032-z

[7] Kumar, J., Singh, A., Bhadauria, H.S. (2020). Congestion control load balancing adaptive routing protocols for random waypoint model in mobile Ad-Hoc networks. Journal of Ambient Intelligence and Humanized Computing, 12(5): 5479-5487. https://doi.org/10.1007/s12652-020-02059-y

[8] Sudhakar, T., Inbarani, H.H. (2021). FM—MANETs: A novel fuzzy mobility on multi-path routing in mobile Ad-Hoc Networks for route selection. In Advances in Distributed Computing and Machine Learning, pp. 15-24. https://doi.org/10.1007/978-981-15-4218-3_2

[9] Albu-Salih, A., Al-Abbas, G. (2021). Performance evaluation of mobility models over UDP traffic pattern for MANET using NS-2. Baghdad Science Journal, 18(1): 0175-0175.

[10] Kaur, P., Singh, A., Gill, S.S. (2020). RGIM: An integrated approach to improve QoS in AODV, DSR and DSDV routing protocols for FANETS using the chain mobility model. The Computer Journal, 63(10): 1500-1512. https://doi.org/10.1093/comjnl/bxaa040

[11] Mandal, B., Sarkar, S., Bhattacharya, S., Dasgupta, U., Ghosh, P., Sanki, D. (2020). A review on cooperative bait based intrusion detection in MANET. Proceedings of Industry Interactive Innovations in Science, Engineering & Technology (I3SET2K19). https://dx.doi.org/10.2139/ssrn.3515151

[12] Suma, R., Premasudha, B.G., Ram, V.R. (2020). A novel machine learning-based attacker detection system to secure location aided routing in MANETs. International Journal of Networking and Virtual Organisations, 22(1): 17-41. https://doi.org/10.1504/IJNVO.2020.104968

[13] Uppalapati, S. (2020). Energy-efficient heterogeneous optimization routing protocol for wireless sensor network. Instrumentation, Mesures, Métrologies, 19(5): 391-397.

[14] Srilakshmi, U., Veeraiah, N., Alotaibi, Y., Alghamdi, S.A., Khalaf, O.I., Subbayamma, B.V. (2021). An improved hybrid secure multipath routing protocol for MANET. IEEE Access, 9: 163043-163053. https://doi.org/10.1109/ACCESS.2021.3133882

[15] Prasad, R., Shankar, P.S. (2020). Efficient performance analysis of energy aware on demand routing protocol in mobile Ad-Hoc network. Engineering Reports, 2(3): e12116. https://doi.org/10.1002/eng2.12116

[16] Kumar, S.V., Anuratha, V. (2020). Energy efficient routing for MANET using optimized hierarchical routing algorithm (Ee-Ohra). International Journal of Scientific & Technology Research, 9(2): 2157-2162.

[17] Rajashanthi, M., Valarmathi, K. (2020). A secure trusted multipath routing and optimal fuzzy logic for enhancing QoS in MANETs. Wireless Personal Communications, 112(1): 75-90. https://doi.org/10.1007/s11277-019-07016-3

[18] Monika, P., Rao, P.V., Premkumar, B.C., Mallick, P.K. (2020). Implementing and evaluating the performance metrics using energy consumption protocols in MANETs using multipath routing-fitness function. In Cognitive Informatics and Soft Computing: Proceeding of CISC 2019, pp. 281-294. https://doi.org/10.1007/978-981-15-1451-7_31

[19] Rahman, T., Ullah, I., Rehman, A.U., Naqvi, R.A. (2020). Notice of violation of IEEE publication principles: Clustering schemes in MANETs: Performance evaluation, open challenges, and proposed solutions. IEEE Access, 8: 25135-25158. https://doi.org/10.1109/ACCESS.2020.2970481

[20] Singh, S.K., Prakash, J. (2020). Energy efficiency and load balancing in MANET: A survey. In 2020 6th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, pp. 832-837. https://doi.org/10.1109/ICACCS48705.2020.9074398

[21] Muhammad, H.A., Yahiya, T.A., Al-Salihi, N. (2019). Comparative study between reactive and proactive protocols of (MANET) in terms of power consumption and quality of service. In Computer Networks: 26th International Conference, CN 2019, Kamień Śląski, Poland, pp. 99-111. https://doi.org/10.1007/978-3-030-21952-9_8

[22] Medeiros, D.S.V., Neto, H.N.C., Lopez, M.A., Magalhães, L.C.S., Fernandes, N.C., Vieira, A.B., Silva, E.F., Mattos, D.M.F. (2020). A survey on data analysis on large-scale wireless networks: Online stream processing, trends, and challenges. Journal of Internet Services and Applications, 11: 1-48. https://doi.org/10.1186/s13174-020-00127-2

[23] Kurniawan, A., Kristalina, P., Hadi, M.Z.S. (2020). Performance analysis of routing protocols AODV, OLSR and DSDV on MANET using NS3. In 2020 International Electronics Symposium (IES), pp. 199-206. https://doi.org/10.1109/IES50839.2020.9231690

[24] Alasadi, S.A., Al-Joda, A.A., Abdullah, E.F. (2021). Mobile Ad-Hoc network (MANET) proactive and reactive routing protocols. Journal of Discrete Mathematical Sciences and Cryptography, 24(7): 2017-2025. https://doi.org/10.1080/09720529.2021.1958997

[25] Benatia, S.E., Smail, O., Meftah, B., Rebbah, M., Cousin, B. (2021). A reliable multipath routing protocol based on link quality and stability for MANETs in urban areas. Simulation Modelling Practice and Theory, 113: 102397. https://doi.org/10.1016/j.simpat.2021.102397

[26] Mohindra, A., Gandhi, C. (2020). Weighted multipath energy-aware clustered stable routing protocol for mobile Ad-Hoc networks. International Journal of Sensors Wireless Communications and Control, 10(3): 308-317. https://doi.org/10.2174/2210327909666190404141939

[27] Muniyandi, R.C., Qamar, F., Jasim, A.N. (2020). Genetic optimized location aided routing protocol for VANET based on rectangular estimation of position. Applied Sciences, 10(17): 5759. https://doi.org/10.3390/app10175759

[28] Abdali, A.T.A.N., Muniyandi, R.C. (2017). Optimized model for energy aware location aided routing protocol in MANET. International Journal of Applied Engineering Research, 12(14): 4631-4637.

[29] Farheen, N.S., Jain, A. (2022). Improved routing in MANET with optimized multi path routing fine tuned with hybrid modeling. Journal of King Saud University-Computer and Information Sciences, 34(6): 2443-2450. https://doi.org/10.1016/j.jksuci.2020.01.001

[30] Saudi, N.A.M., Arshad, M.A., Buja, A.G., Fadzil, A.F.A., Saidi, R.M. (2019). Mobile Ad-Hoc network (MANET) routing protocols: A performance assessment. In Proceedings of the Third International Conference on Computing, Mathematics and Statistics (iCMS2017) Transcending Boundaries, Embracing Multidisciplinary Diversities, pp. 53-59. https://doi.org/10.1007/978-981-13-7279-7_7

[31] Minh, T.P.T., Nguyen, T.T., Kim, D.S. (2015). Location aided zone routing protocol in mobile Ad-Hoc Networks. In 2015 IEEE 20th Conference on Emerging Technologies & Factory Automation (ETFA), pp. 1-4. https://doi.org/10.1109/ETFA.2015.7301615

[32] Tran, T.N., Nguyen, T.V., Shim, K., An, B. (2020). A game theory based clustering protocol to support multicast routing in cognitive radio mobile Ad-Hoc networks. IEEE Access, 8: 141310-141330. https://doi.org/10.1109/ACCESS.2020.3013644

[33] Farheen, N.S., Jain, A. (2022). Improved routing in MANET with optimized multi path routing fine tuned with hybrid modeling. Journal of King Saud University-Computer and Information Sciences, 34(6): 2443-2450. https://doi.org/10.1016/j.jksuci.2020.01.001

[34] Kanthimathi, S., Prathuri, J.R. (2020). Classification of misbehaving nodes in MANETS using machine learning techniques. In 2020 2nd PhD Colloquium on Ethically Driven Innovation and Technology for Society (PhD EDITS), pp. 1-2. https://doi.org/10.1109/PhDEDITS51180.2020.9315311

[35] Thamizhmaran, K. (2021). Efficient dynamic acknowledgement scheme for MANET. Journal of Advanced Research in Embedded System, 7(3 & 4): 1-6.

[36] Elwahsh, H., Gamal, M., Salama, A.A., El-Henawy, I.M. (2018). A novel approach for classifying MANETs attacks with a neutrosophic intelligent system based on genetic algorithm. Security and Communication Networks, 2018(1): 5828517. https://doi.org/10.1155/2018/5828517

[37] Choi, Y.J., Seo, J.S., Hong, J.P. (2021). Deep reinforcement learning-based distributed routing algorithm for minimizing end-to-end delay in MANET. Journal of the Korea Institute of Information and Communication Engineering, 25(9): 1267-1270. https://doi.org/10.6109/jkiice.2021.25.9.1267

[38] Khan, M.S., Waris, S., Ali, I., Khan, M.I., Anisi, M.H. (2018). Mitigation of packet loss using data rate adaptation scheme in MANETs. Mobile Networks and Applications, 23: 1141-1150. https://doi.org/10.1007/s11036-016-0780-y

[39] Ravi, S., Matheswaran, S., Perumal, U., Sivakumar, S., Palvadi, S.K. (2023). Adaptive trust-based secure and optimal route selection algorithm for MANET using hybrid fuzzy optimization. Peer-to-Peer Networking and Applications, 16(1): 22-34. https://doi.org/10.1007/s12083-022-01351-2

[40] Sathyaraj, P., Rukmani Devi, D. (2021). Designing the routing protocol with secured IoT devices and QoS over MANET using trust-based performance evaluation method. Journal of Ambient Intelligence and Humanized Computing, 12: 6987-6995. https://doi.org/10.1007/s12652-020-02358-4

[41] Hassan, M.H., Mostafa, S.A., Mohammed, M.A., Ibrahim, D.A., Khalaf, B.A., Al-Khaleefa, A.S. (2019). Integrating African Buffalo optimization algorithm in AODV routing protocol for improving the QoS of MANET. Journal of Southwest Jiaotong University, 54(3).

[42] Kalaivanan, S. (2021). Quality of service (QoS) and priority aware models for energy efficient and demand routing procedure in mobile Ad-Hoc networks. Journal of Ambient Intelligence and Humanized Computing, 12: 4019-4026. https://doi.org/10.1007/s12652-020-01769-7

[43] Kumar, S.S. (2019). Minimizing link failure in mobile Ad-Hoc networks through QoS routing. In Innovations in Computer Science and Engineering: Proceedings of the Fifth ICICSE 2017, pp. 241-247. https://doi.org/10.1007/978-981-10-8201-6_27

[44] Murugan, K., Anita, R. (2018). Interlaced link routing and genetic topology control data forwarding for quality aware MANET communication. Wireless Personal Communications, 102: 3323-3341. https://doi.org/10.1007/s11277-018-5370-9

[45] Pandey, P., Singh, R. (2021). Decision factor based modified AODV for improvement of routing performance in MANET. In International Conference on Advanced Network Technologies and Intelligent Computing, pp. 63-72. https://doi.org/10.1007/978-3-030-96040-7_5

[46] Ramani, T., Sengottuvelan, P. (2019). QoS development based on link prediction with time factor for clustering the route optimization and route selection in mobile Ad-Hoc network. International Journal of Recent Technology and Engineering, 7(5S3): 2277-3878.

[47] Mammeri, Z. (2019). Reinforcement learning based routing in networks: Review and classification of approaches. IEEE Access, 7: 55916-55950. https://doi.org/10.1109/ACCESS.2019.2913776

[48] Wang, S., Liu, H., Gomes, P.H., Krishnamachari, B. (2018). Deep reinforcement learning for dynamic multichannel access in wireless networks. IEEE Transactions on Cognitive Communications and Networking, 4(2): 257-265. https://doi.org/10.1109/TCCN.2018.2809722